1. Concept

1.1 Parallelism and Concurrency

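Parallelism: Multiple computations are performed simultaneously, for example on multiple cores or processors. Common forms:

– Instruction-level parallelism (e.g., pipelining)

– Data parallelism: the same operation is applied to different pieces of data at the same time

– Task parallelism: different tasks run at the same time (e.g., embarrassingly parallel problems, whose tasks need little or no coordination)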

Concurrency: Multiple computations that may be done in parallel: their executions overlap in time, but they do not have to run at the same instant.

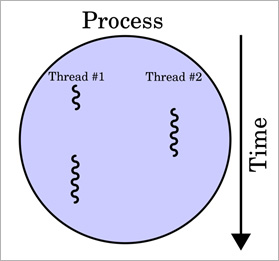

A process with two threads of execution on a single processor.

– On a single processor, multithreading generally occurs by time-division multiplexing (as in multitasking): the processor switches between different threads, so the threads run concurrently but not in parallel.
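Data parallelism can be illustrated with a minimal sketch in C using POSIX threads (link with `-pthread`). It assumes an array of `long` values split into contiguous slices, one per thread; the names `parallel_sum`, `sum_slice`, `struct slice`, and `MAX_THREADS` are illustrative, not part of any library. Each thread writes only its own `partial` field, so no lock is needed, and `pthread_join` ensures that write is visible before the caller reads it. On a single processor the same code still runs correctly, but the threads are time-multiplexed rather than truly parallel.

```c
#include <pthread.h>
#include <stddef.h>

#define MAX_THREADS 16 /* illustrative upper bound on the thread count */

/* Work description for one thread: it sums data[begin, end). */
struct slice {
    const long *data;
    size_t begin, end;
    long partial; /* written only by the thread that owns this slice */
};

static void *sum_slice(void *arg) {
    struct slice *s = arg;
    long acc = 0;
    for (size_t i = s->begin; i < s->end; i++)
        acc += s->data[i];
    s->partial = acc;
    return NULL;
}

/* Data parallelism: every thread applies the same operation (summing)
   to a different part of the array; the caller combines the results. */
long parallel_sum(const long *data, size_t n, int nthreads) {
    if (nthreads < 1) nthreads = 1;
    if (nthreads > MAX_THREADS) nthreads = MAX_THREADS;

    pthread_t tid[MAX_THREADS];
    struct slice sl[MAX_THREADS];
    int created[MAX_THREADS];
    size_t chunk = n / (size_t)nthreads, rem = n % (size_t)nthreads, start = 0;

    for (int t = 0; t < nthreads; t++) {
        size_t len = chunk + ((size_t)t < rem ? 1 : 0); /* spread the remainder */
        sl[t] = (struct slice){ data, start, start + len, 0 };
        start += len;
        created[t] = pthread_create(&tid[t], NULL, sum_slice, &sl[t]) == 0;
        if (!created[t])
            sum_slice(&sl[t]); /* fall back to summing this slice ourselves */
    }

    long total = 0;
    for (int t = 0; t < nthreads; t++) {
        if (created[t])
            pthread_join(tid[t], NULL); /* wait before reading partial */
        total += sl[t].partial;
    }
    return total;
}
```

Called from your own `main`, `parallel_sum(v, 1000, 4)` with `v` holding 1, 2, ..., 1000 returns 500500, the same result as a serial loop.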

1.2 Processes vs. Threads

Process: An instance of a program that is being executed in its own address space. In POSIX systems, each process maintains its own heap, stack, registers, file descriptors, etc.

– Communication: through system-provided inter-process communication mechanisms such as shared memory, the network (sockets), pipes, and message queues.
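One of these mechanisms, a pipe, can be sketched as follows using the POSIX calls `pipe`, `fork`, `read`, `write`, and `waitpid`; `pipe_roundtrip` is a hypothetical helper name. Because parent and child have separate address spaces after `fork`, the message has to travel through the kernel-managed pipe rather than through a shared variable. The sketch assumes short messages that fit in the pipe buffer.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Sends msg from a child process to its parent through a pipe and copies
   what the parent received into out (at most outsz - 1 bytes, NUL-terminated).
   Returns the number of bytes received, or -1 on error. */
ssize_t pipe_roundtrip(const char *msg, char *out, size_t outsz) {
    int fd[2]; /* fd[0]: read end, fd[1]: write end */
    if (outsz == 0 || pipe(fd) == -1)
        return -1;

    pid_t pid = fork();
    if (pid == -1) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }
    if (pid == 0) { /* child: writes only */
        close(fd[0]);
        size_t len = strlen(msg);
        ssize_t w = write(fd[1], msg, len);
        close(fd[1]);
        _exit(w == (ssize_t)len ? 0 : 1);
    }

    close(fd[1]); /* parent: reads only; closing the write end lets read see EOF */
    size_t got = 0;
    ssize_t r;
    while (got < outsz - 1 && (r = read(fd[0], out + got, outsz - 1 - got)) > 0)
        got += (size_t)r;
    close(fd[0]);
    out[got] = '\0';

    int status;
    if (waitpid(pid, &status, 0) == -1)
        return -1;
    return (WIFEXITED(status) && WEXITSTATUS(status) == 0) ? (ssize_t)got : -1;
}
```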

Thread: A lightweight process that shares its address space with the other threads of the same process. In POSIX systems, each thread maintains only the bare essentials: its own registers, stack, and signal mask.

– Communication: directly through the shared address space (e.g., global variables and heap memory).

Note: POSIX (Portable Operating System Interface)

– A family of standards, specified by the IEEE, that defines the application programming interface (API), along with command-line shell and utility interfaces, for Unix and Unix-like operating systems.

Threads differ from traditional multitasking operating system processes in that:

– processes are typically independent, while threads exist as subsets of a process

– processes carry considerable state information, whereas multiple threads within a process share state as well as memory and other resources

– processes have separate address spaces, whereas threads share their address space

– processes interact only through system-provided inter-process communication mechanisms, whereas threads can communicate directly through shared memory

– context switching between threads in the same process is typically faster than context switching between processes, because the address space does not have to be switched.
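The difference in address spaces can be observed directly. In this minimal sketch (the function names are illustrative), a child process created with `fork` and a thread created with `pthread_create` both assign 42 to the same global variable; only the thread's write is seen by the caller, because the child modified its own copy. The child calls `_exit` so that it does not flush stdio buffers copied from the parent.

```c
#include <pthread.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int shared_value = 0; /* a global variable */

static void *thread_body(void *arg) {
    (void)arg;
    shared_value = 42; /* same address space: the creating thread sees this */
    return NULL;
}

/* Modifies the global in a child process; returns the parent's view afterwards. */
int value_after_child_process(void) {
    shared_value = 0;
    pid_t pid = fork();
    if (pid == -1)
        return -1;
    if (pid == 0) { /* child writes to its own copy of the global */
        shared_value = 42;
        _exit(0);
    }
    waitpid(pid, NULL, 0);
    return shared_value; /* parent's copy is untouched: still 0 */
}

/* Modifies the global in a thread; returns the caller's view afterwards. */
int value_after_thread(void) {
    shared_value = 0;
    pthread_t t;
    if (pthread_create(&t, NULL, thread_body, NULL) != 0)
        return -1;
    pthread_join(t, NULL); /* joining also makes the thread's write visible */
    return shared_value;   /* 42 */
}
```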

1.3 Multithreaded Concurrency

Serial execution:

– All our programs so far have had a single thread of execution: the main thread.

– The program exits when the main thread exits.

Multithreaded:

– The program is organized as multiple concurrent threads of execution.

– The main thread spawns additional threads.

– The threads may communicate with one another.

– Advantages: improved performance (threads can run in parallel on multiple cores), improved responsiveness (one thread can keep handling input while another waits on a slow operation), better utilization (the processor can run another thread while one is blocked, e.g. on I/O), and less overhead than using multiple processes.
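The spawn-then-wait pattern described above can be sketched with `pthread_create` and `pthread_join`. This is a minimal example rather than a template from the course: `spawn_and_join`, `square_id`, and `NUM_WORKERS` are hypothetical names, and each thread's id is passed by value through the `void *` argument (a common but implementation-defined cast via `intptr_t`). The calling thread plays the role of the main thread and collects each worker's return value when it joins it.

```c
#include <pthread.h>
#include <stdint.h>

#define NUM_WORKERS 4

/* Thread function: receives its id through the void * argument and
   returns id * id through the void * return value. */
static void *square_id(void *arg) {
    intptr_t id = (intptr_t)arg;
    return (void *)(id * id);
}

/* The calling (main) thread spawns NUM_WORKERS threads, waits for each one
   with pthread_join, and adds up their results. Returns -1 if not every
   thread could be created. */
long spawn_and_join(void) {
    pthread_t tid[NUM_WORKERS];
    int created = 0;
    for (intptr_t i = 0; i < NUM_WORKERS; i++) {
        if (pthread_create(&tid[i], NULL, square_id, (void *)i) != 0)
            break;
        created++;
    }

    long total = 0;
    for (int i = 0; i < created; i++) {
        void *ret;
        pthread_join(tid[i], &ret); /* blocks until thread i has finished */
        total += (long)(intptr_t)ret;
    }
    return created == NUM_WORKERS ? total : -1;
}
```

With four workers the results are 0, 1, 4, and 9, so `spawn_and_join()` returns 14.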

1.4 Designing Threaded Programs

Parallel Programming

There are many considerations when designing parallel programs, such as:

– Which parallel programming model to use

– Problem partitioning

– Load balancing

– Communications

– Data dependencies

– Synchronization and race conditions

– Memory issues

– I/O issues

– Program complexity

– Programmer effort/costs/time

– ...

Several common models for threaded programs exist:

– Manager/worker: a single thread, the manager, assigns work to other threads, the workers. Typically, the manager handles all input and parcels out work to the workers. At least two forms of the manager/worker model are common: static worker pool and dynamic worker pool.

– Pipeline: a task is broken into a series of suboperations, each of which is handled in series, but concurrently, by a different thread. An automobile assembly line best describes this model.

– Peer: similar to the manager/worker model, but after the main thread creates the other threads, it participates in the work itself.
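A manager/worker arrangement can be sketched with a fixed-size pool of worker threads that claim tasks from a shared index protected by a mutex, so a worker that finishes early simply takes the next task. This is one possible design under stated assumptions (tasks are independent integers whose squares go into a result array); `run_pool`, `worker`, `struct work_queue`, and `POOL_SIZE` are hypothetical names.

```c
#include <pthread.h>
#include <stddef.h>

#define POOL_SIZE 4 /* illustrative number of worker threads */

/* State shared between the manager and the workers. */
struct work_queue {
    const int *tasks;     /* inputs prepared by the manager */
    long *results;        /* one output slot per task */
    size_t ntasks;
    size_t next;          /* index of the next unclaimed task */
    pthread_mutex_t lock; /* protects next */
};

/* Worker: repeatedly claims the next task until none remain. */
static void *worker(void *arg) {
    struct work_queue *q = arg;
    for (;;) {
        pthread_mutex_lock(&q->lock);
        size_t i = q->next;
        if (i < q->ntasks)
            q->next++;
        pthread_mutex_unlock(&q->lock);
        if (i >= q->ntasks)
            return NULL;
        long x = q->tasks[i];
        q->results[i] = x * x; /* each slot is written by exactly one worker */
    }
}

/* Manager: sets up the shared queue, starts the pool, and waits for it
   to drain. Returns 0 on success, -1 if the mutex cannot be initialized. */
int run_pool(const int *tasks, long *results, size_t ntasks) {
    struct work_queue q = { .tasks = tasks, .results = results,
                            .ntasks = ntasks, .next = 0 };
    if (pthread_mutex_init(&q.lock, NULL) != 0)
        return -1;

    pthread_t tid[POOL_SIZE];
    int started = 0;
    for (int t = 0; t < POOL_SIZE; t++)
        if (pthread_create(&tid[started], NULL, worker, &q) == 0)
            started++;
    if (started == 0)
        worker(&q); /* no threads available: the manager does the work */

    for (int t = 0; t < started; t++)
        pthread_join(tid[t], NULL);
    pthread_mutex_destroy(&q.lock);
    return 0;
}
```

Having the manager also call `worker(&q)` after starting the pool would turn this into the peer model.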


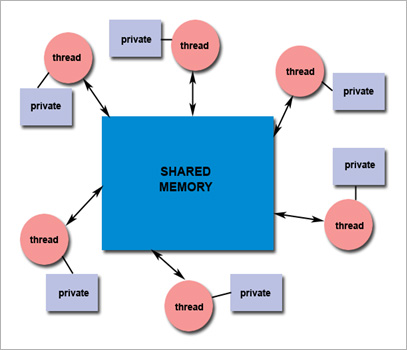

Shared Memory Model

– All threads have access to the same global, shared memory

– Threads also have their own private data

– Programmers are responsible for synchronizing access to (protecting) globally shared data.
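The shared/private split can be sketched as follows (all names are illustrative). `shared_total` is a global that every thread can access, while `local` lives on each thread's own stack and is therefore private. Without the mutex, `shared_total += local` would be an unsynchronized read-modify-write, and two threads could overwrite each other's update.

```c
#include <pthread.h>

#define NTHREADS 4

static long shared_total = 0; /* global: visible to all threads */
static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;

/* Each thread counts in a private local variable, then adds its result
   to the shared global while holding the mutex. */
static void *count_up(void *arg) {
    long iters = *(const long *)arg;
    long local = 0; /* private: on this thread's own stack */
    for (long i = 0; i < iters; i++)
        local++;
    pthread_mutex_lock(&total_lock); /* protect the shared update */
    shared_total += local;
    pthread_mutex_unlock(&total_lock);
    return NULL;
}

/* Runs NTHREADS counters; returns the combined total, or -1 if not
   every thread could be created. */
long count_with_threads(long iters_per_thread) {
    pthread_t tid[NTHREADS];
    int started = 0;
    shared_total = 0;
    for (int t = 0; t < NTHREADS; t++)
        if (pthread_create(&tid[started], NULL, count_up, &iters_per_thread) == 0)
            started++;
    for (int t = 0; t < started; t++)
        pthread_join(tid[t], NULL);
    return started == NTHREADS ? shared_total : -1;
}
```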


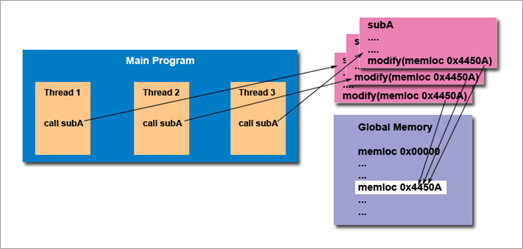

Thread-safeness:

– Thread-safeness, in a nutshell, refers to an application's ability to execute multiple threads simultaneously without "clobbering" shared data or creating race conditions.

– For example, suppose that your application creates several threads, each of which makes a call to the same library routine:

This library routine accesses/modifies a global structure or location in memory.

As each thread calls this routine, it is possible that several threads try to modify this global structure/memory location at the same time.

If the routine does not employ some sort of synchronization construct to prevent data corruption, then it is not thread-safe.
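A thread-safe version of such a routine can be sketched by guarding the global structure with a mutex. The routine `stats_record`, the global `stats`, and the helpers `stats_snapshot` and `hammer_stats` are hypothetical names used only for illustration. Both fields are updated inside the same critical section, so callers that read through `stats_snapshot` never see a half-finished update, and no increment is lost.

```c
#include <pthread.h>

/* Hypothetical global structure modified by a library routine. */
static struct {
    long count;
    long sum;
} stats;
static pthread_mutex_t stats_lock = PTHREAD_MUTEX_INITIALIZER;

/* Thread-safe: the whole read-modify-write of both fields happens
   while holding stats_lock. */
void stats_record(long value) {
    pthread_mutex_lock(&stats_lock);
    stats.count += 1;
    stats.sum += value;
    pthread_mutex_unlock(&stats_lock);
}

/* Reads both fields under the same lock so they are consistent. */
void stats_snapshot(long *count, long *sum) {
    pthread_mutex_lock(&stats_lock);
    *count = stats.count;
    *sum = stats.sum;
    pthread_mutex_unlock(&stats_lock);
}

/* Each caller thread invokes the same routine many times. */
static void *caller(void *arg) {
    (void)arg;
    for (long i = 1; i <= 1000; i++)
        stats_record(i);
    return NULL;
}

/* Runs nthreads (0..8) callers concurrently; returns 0 if all were created. */
int hammer_stats(int nthreads) {
    pthread_t tid[8];
    if (nthreads < 0 || nthreads > 8)
        return -1;
    int started = 0;
    for (int t = 0; t < nthreads; t++)
        if (pthread_create(&tid[started], NULL, caller, NULL) == 0)
            started++;
    for (int t = 0; t < started; t++)
        pthread_join(tid[t], NULL);
    return started == nthreads ? 0 : -1;
}
```

After `hammer_stats(4)`, the snapshot reports a count of 4000 and a sum of 4 × 500500; removing the lock calls from `stats_record` makes both values unreliable.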
